GOOGL’s Gemini 3 Hits 130 IQ Score on AI Benchmark

- Here’s the thing: when you look at the numbers, Gemini 3 isn’t just winning by a little. The benchmark results put it at 130 IQ, and that looks even more impressive once you realize most competing models cluster somewhere between 110 and 125. That’s a solid margin on this kind of standardized test.

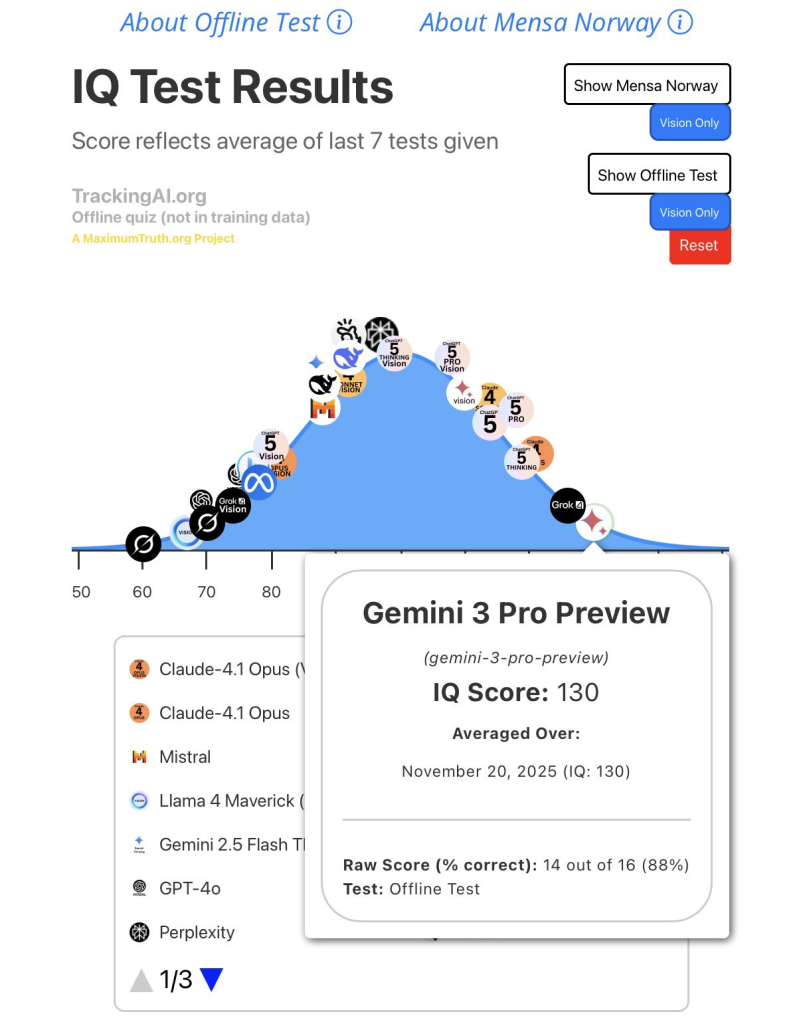

- The data comes from TrackingAI.org’s offline quiz, completed on November 20, 2025, and is shown on a bell-curve distribution where Gemini 3 ranks highest. The test format matters here because it’s designed to stay outside training datasets, so the model should be solving problems it hasn’t seen before rather than regurgitating memorized patterns.

According to the chart, which reports the average of the last seven offline tests, Gemini 3 achieved the highest result among the systems tested.
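For readers curious how an "average of the last seven tests" figure works, here is a minimal sketch in Python. It is only an illustration: the function name `rolling_score` and the sample numbers are made up for this example and are not TrackingAI.org's data or code, and the site's actual method may differ.

```python
from statistics import mean


def rolling_score(scores, window=7):
    """Return the average of the most recent `window` scores.

    `scores` is ordered oldest first. If fewer than `window` scores
    exist, all of them are averaged.
    """
    if not scores:
        raise ValueError("need at least one score")
    recent = scores[-window:]  # keep only the latest `window` results
    return mean(recent)


# Hypothetical per-test scores, oldest first. NOT real benchmark data.
example_scores = [120, 124, 131, 127, 133, 130, 135, 128, 134]
print(round(rolling_score(example_scores), 1))
```

Averaging over a window like this smooths out a single unusually good or bad run, which is presumably why the chart reports it rather than one test result.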

- Other heavy hitters like Claude 4.1 Opus, Gemini 2.5 Flash, and Llama 4 Maverick all scored below this new release. The chart places each model’s icon according to its performance, and Gemini 3 occupies the highest-ranking spot by a noticeable margin. Getting 14 of 16 questions correct corresponds to that 130 score, putting Google’s model ahead in an increasingly competitive race.

- What makes this interesting is the timing. Capability improvements are accelerating across the board, and Google is clearly pushing back against other major AI developers on high-level reasoning tests. As these large-scale models keep improving their accuracy on standardized benchmarks, the performance hierarchy keeps shifting, and that will influence everything from product rollouts to competitive positioning across both research and commercial applications.

My Take: The 130 IQ score is impressive, but what really matters is whether it translates to real-world performance. Benchmark victories are great for headlines. Users care about practical applications: whether the model handles complex tasks better than the alternatives in actual use.

Source: Chubby