Google’s Gemini AI Criticized for Search Accuracy Issues

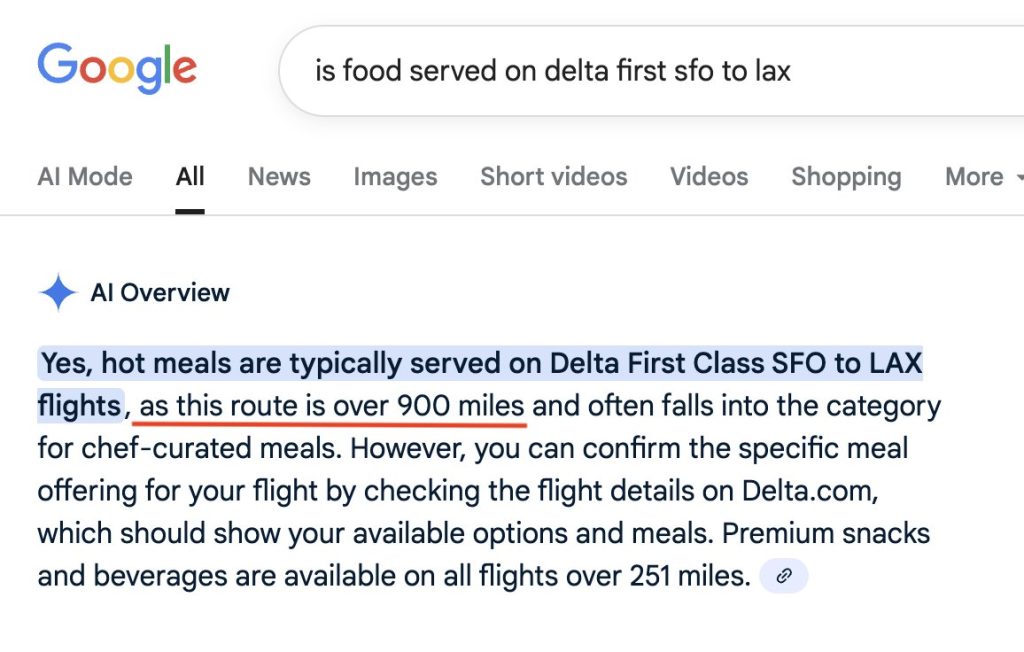

- Venture capitalist Justine Moore went viral criticizing Google’s Gemini AI integration in search. Her main example: Gemini claimed a San Francisco to Los Angeles flight is over 900 miles (it’s actually under 350) and used this wrong distance to incorrectly say the flight offers “chef-curated meals.” Moore warns that deploying such an error-prone AI to millions of users will make people think it’s unreliable.

- Justine Moore called out Google for rushing a flawed integration of Gemini AI into search results. Her tweet went viral after showing how Gemini confidently claimed a San Francisco to Los Angeles flight is over 900 miles when it’s actually less than 350. Based on this wrong distance, it incorrectly concluded passengers would get “chef-curated meals.” It’s the kind of basic mistake that raises serious questions about how thoroughly the feature was tested before launch.

- Google wants Gemini to deliver quick answers right in search results, but the execution is worrying. When an AI speaks with complete confidence while being completely wrong, it erodes trust fast. As Moore put it, most people encountering these errors will reasonably conclude “that it’s an idiot.”

- Google’s entire business runs on people trusting its search results. If Gemini keeps making obvious mistakes, users might look elsewhere: OpenAI’s ChatGPT, Perplexity, and Microsoft’s Copilot are all ready alternatives. Google may need to slow down, add better fact-checking, or clearly label AI responses.

- This isn’t just a Google problem. Every tech giant is racing to dominate AI search, and they’re all learning the same lesson: moving fast and breaking things works differently when billions of people depend on you for accurate information. For Google, Gemini could either revolutionize search or become a cautionary tale about launching half-baked AI at scale.