ChatGPT Flags Gene Defect After 10+ Years of Medical Testing

After more than ten years of unexplained neurological symptoms and extensive medical testing, a Reddit user credits OpenAI’s ChatGPT with helping surface a potential underlying genetic factor. The case, later highlighted by Simplifying AI, illustrates how large language models may assist in pattern recognition when traditional diagnostics fail to provide clear answers.

Background and Medical History

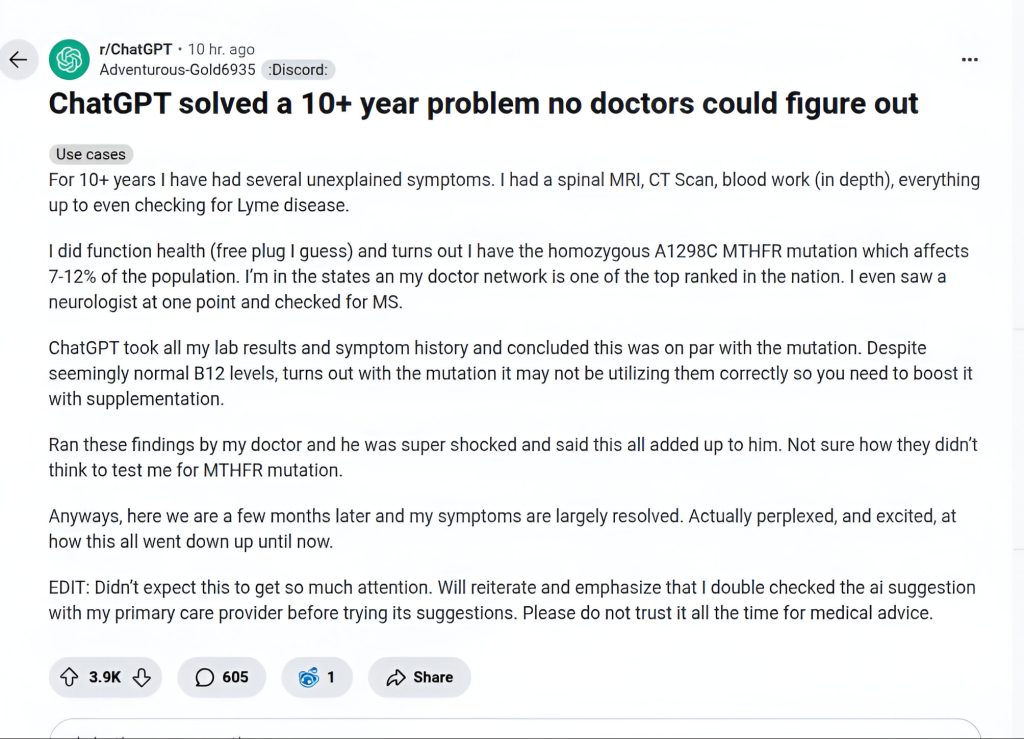

According to the post shared on Reddit, the individual had undergone years of testing to explain persistent neurological symptoms. These evaluations included spinal MRI scans, CT imaging, comprehensive blood work, and screening for conditions such as Lyme disease and multiple sclerosis.

Despite the scope of testing, no definitive diagnosis was reached. Standard lab results, including vitamin B12 levels, consistently fell within normal ranges, leaving the symptoms unexplained and untreated.

AI-Assisted Pattern Recognition

Identification of a Potential Genetic Factor

The turning point came when the user uploaded their full medical history and laboratory results into ChatGPT. Rather than providing a diagnosis, the model identified a recurring pattern related to vitamin B12 metabolism and suggested further investigation into a possible MTHFR gene mutation, specifically the A1298C variant.

ChatGPT noted that certain genetic mutations can interfere with how the body processes B12, so a functional deficiency may exist even when serum B12 levels appear normal on blood tests.

As the user emphasized, “ChatGPT did not replace clinical judgment, but helped guide attention toward a factor that could then be properly tested and confirmed through standard medical practice.”

Clinical Confirmation and Outcome

Importantly, the AI-generated insight was treated strictly as a hypothesis. The user reviewed the findings with a primary care physician, who then ordered formal genetic testing. The results confirmed a homozygous MTHFR A1298C mutation.

Under medical supervision, the individual began targeted treatment and supplementation. After several months, the user reported that long-standing symptoms, including brain fog and tingling sensations, had largely resolved.

Why This Case Matters

This example highlights the potential role of AI tools as analytical assistants rather than diagnostic authorities. By synthesizing complex medical histories and lab data, large language models may help surface overlooked possibilities that warrant further clinical investigation.

At the same time, the case underscores clear limitations. AI systems cannot replace professional medical judgment, and all decisions regarding diagnosis and treatment must remain firmly grounded in clinical expertise.

Broader Implications for AI in Healthcare

The story reflects a growing interest in how AI may support decision-making in healthcare settings, particularly in complex or long-running cases. While such tools can enhance pattern recognition and hypothesis generation, they must operate within a framework that prioritizes physician oversight, validated testing, and patient safety.

As the original poster cautioned in an update, AI-generated insights should never be acted upon without consultation with qualified healthcare professionals.