AI Infrastructure Surges Toward 40 GW by 2030

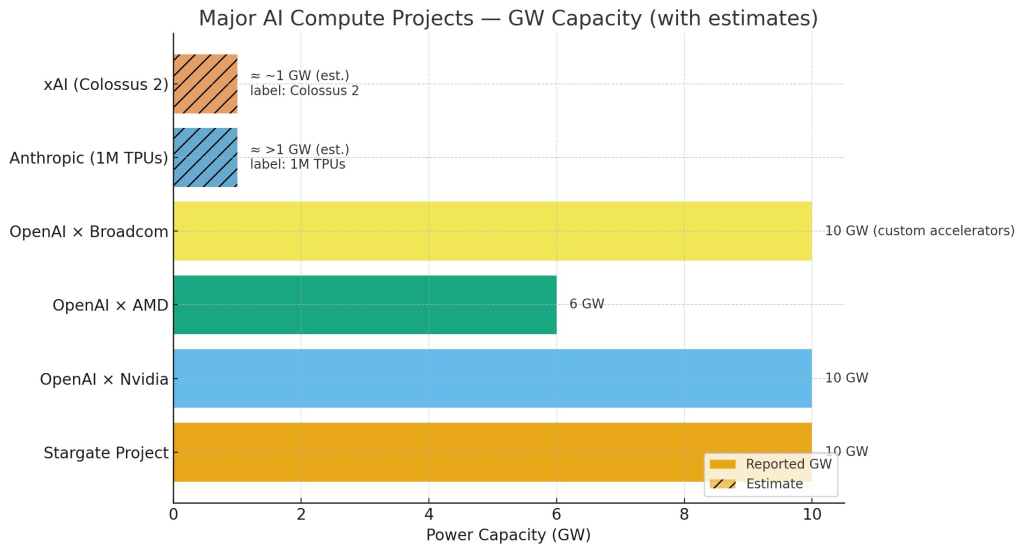

- A recent analysis from Haider shows how large AI infrastructure is becoming. The world’s top AI labs plan to deploy more than 40 gigawatts (GW) of compute capacity by 2030. To put that in perspective, that is power consumption on the order of several small countries, all dedicated to running artificial intelligence.

- This represents a fundamental shift in how we use energy. Projects like Stargate (10 GW), OpenAI’s partnerships with Nvidia, AMD, and Broadcom (26 GW combined), Anthropic’s million-TPU initiative (over 1 GW), and xAI’s Colossus 2 (around 1 GW) are sized at the scale of traditional power grids. Instead of lighting up cities, though, they will power machine intelligence.

- The buildout is not without challenges. Supply chain constraints, chip shortages, and the large carbon footprint and cooling requirements of these high-density facilities all pose real risks.

- The economics are staggering too. A single 10 GW compute campus can cost $100 billion or more, covering everything from chip production to renewable energy integration and data center construction. OpenAI has spread its bets across multiple hardware providers so that a problem in any one supply chain does not stall its plans.

- This buildout is industrializing AI, merging energy, tech, and policy into a single global system. Governments are working out how to expand power grids and regulate these energy-intensive facilities, while companies compete for renewable energy contracts and land for massive data center campuses.
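
For readers who want to check the arithmetic, here is a rough back-of-the-envelope sketch using only the figures quoted above. It rests on several assumptions that the article does not confirm: that the listed projects do not overlap, that "over 1 GW" and "around 1 GW" can be rounded to 1 GW, and that the $100 billion per 10 GW campus figure scales linearly. The variable and function names are illustrative only.

```python
# Capacity figures (GW) as quoted in the article.
# Assumption: "over 1 GW" and "around 1 GW" are both rounded to 1.0.
# Assumption: the projects do not overlap (the article does not say).
PROJECTS_GW = {
    "Stargate": 10.0,
    "OpenAI partnerships (Nvidia, AMD, Broadcom)": 26.0,
    "Anthropic million-TPU initiative": 1.0,
    "xAI Colossus 2": 1.0,
}

# Article figure: a single 10 GW campus can cost $100 billion or more.
CAMPUS_GW = 10.0
CAMPUS_COST_USD_BN = 100.0


def total_capacity_gw(projects):
    """Sum the quoted capacity of all listed projects, in GW."""
    return sum(projects.values())


def cost_per_gw_usd_bn(campus_cost_usd_bn, campus_gw):
    """Cost per GW in billions of USD, assuming linear scaling."""
    return campus_cost_usd_bn / campus_gw


total_gw = total_capacity_gw(PROJECTS_GW)
per_gw = cost_per_gw_usd_bn(CAMPUS_COST_USD_BN, CAMPUS_GW)
implied_cost = total_gw * per_gw  # a floor: the article says "$100 billion or more"

print(f"Listed projects: {total_gw:.0f} GW")
print(f"Cost per GW: at least ${per_gw:.0f} billion")
print(f"Implied floor for listed projects: ${implied_cost:.0f} billion")
```

Under these assumptions the listed projects come to about 38 GW, close to the 40 GW headline, and the implied spend is on the order of $380 billion at roughly $10 billion per GW. Treat both numbers as illustrative rather than as forecasts.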