Space GPUs Could Cut Costs to $1,000 Per Unit vs $10,000 on Earth

A fresh analysis of AI infrastructure costs suggests something remarkable: running Nvidia GPUs in orbit may eventually become cheaper than operating them in traditional Earth-based data centers.

The key variable is launch pricing. If rocket costs fall fast enough, orbital deployment could undercut the rising expenses of terrestrial power, cooling, and electrical infrastructure.

Earth-Based Data Center Costs

Today, hosting AI GPUs on the ground is capital-intensive. Beyond the hardware itself, operators must invest heavily in:

- Solar generation or grid power infrastructure

- Battery storage systems

- Electrical distribution equipment

- Cooling systems

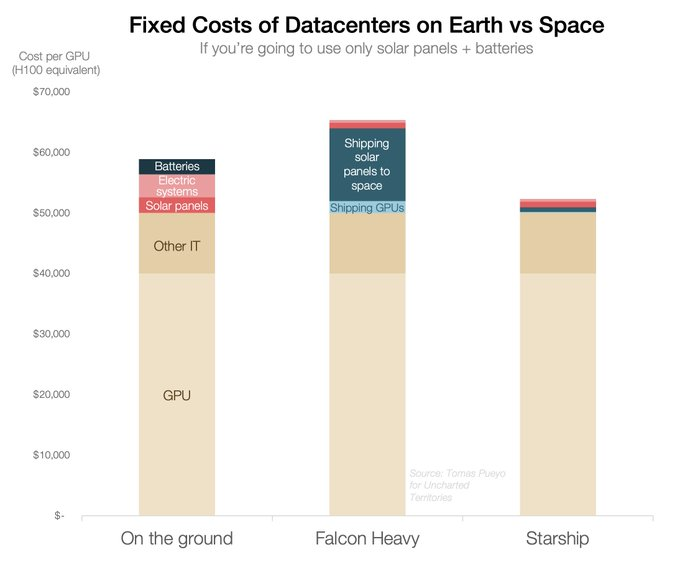

Estimates suggest that the solar and battery infrastructure alone can total roughly $10,000 per GPU, before long-term operating costs are even considered.

This pressure is already visible across the industry. As covered in Finly’s report on the AI data center cost explosion, infrastructure spending is accelerating rapidly.

That analysis highlights projections suggesting AI data center investments could reach $8 trillion, with infrastructure expenses in many cases rising faster than hardware costs.

Orbital Hosting: The Cost Equation Flips

In orbit, the dominant cost shifts from energy infrastructure to transportation. At Falcon Heavy's price of approximately $1,500 per kilogram, launching a GPU plus its supporting solar array (about 10 kg total) would cost around $15,000 per unit. That puts orbital deployment in the same range as Earth-based infrastructure costs, though still about 50% above the roughly $10,000 terrestrial estimate.
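The per-unit figure above is simple arithmetic: launch price per kilogram times payload mass. The sketch below reproduces it, assuming the article's numbers (about 10 kg per GPU plus solar array, about $1,500/kg for Falcon Heavy, about $10,000 of terrestrial infrastructure per GPU). The constant and function names are illustrative only, and the calculation deliberately ignores hardware, integration, insurance, and in-orbit operations.

```python
# Minimal sketch of the per-GPU launch-cost arithmetic.
# Assumptions (taken from the article, not independently verified):
#   - each GPU plus its supporting solar array weighs about 10 kg
#   - Falcon Heavy costs about $1,500 per kilogram to orbit
#   - Earth-side infrastructure costs about $10,000 per GPU
# Hardware, integration, insurance, and operations costs are ignored.

PAYLOAD_KG_PER_GPU = 10.0           # hypothetical constant: GPU + solar array mass
FALCON_HEAVY_USD_PER_KG = 1_500.0   # article's approximate Falcon Heavy price
TERRESTRIAL_USD_PER_GPU = 10_000.0  # article's Earth-side infrastructure estimate


def launch_cost_per_gpu(usd_per_kg: float, payload_kg: float = PAYLOAD_KG_PER_GPU) -> float:
    """Return the launch cost in USD for one GPU unit of the given mass."""
    if usd_per_kg < 0 or payload_kg <= 0:
        raise ValueError("price must be non-negative and payload mass positive")
    return usd_per_kg * payload_kg


if __name__ == "__main__":
    cost = launch_cost_per_gpu(FALCON_HEAVY_USD_PER_KG)
    # Relative premium of launch cost over the terrestrial infrastructure estimate.
    premium = cost / TERRESTRIAL_USD_PER_GPU - 1
    print(f"Falcon Heavy launch cost per GPU: ${cost:,.0f}")  # $15,000
    print(f"Premium over terrestrial estimate: {premium:.0%}")  # 50%
```

Swapping in a different price per kilogram or payload mass shows how sensitive the per-unit figure is to both inputs.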

However, if Starship reaches its long-stated goal of $100 per kilogram, the economics change dramatically:

- 10 kg payload × $100/kg = $1,000 per GPU

Compared with roughly $10,000 in terrestrial infrastructure costs, a $1,000 launch cost would be about one-tenth as much, implying that orbital hosting could become roughly 10 times cheaper on this measure.
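The same numbers also give a break-even launch price: the cost per kilogram at which launching a 10 kg unit matches the roughly $10,000 terrestrial estimate. The sketch below computes that threshold and the terrestrial-to-orbital cost ratio at the two launch prices the article cites. It assumes the article's figures and counts only launch cost on the orbital side; the function names are hypothetical.

```python
# Sketch: compare orbital launch cost with the article's terrestrial estimate.
# Assumptions (from the article): ~10 kg per GPU unit and ~$10,000 of
# Earth-side infrastructure per GPU. Only launch cost is counted in orbit.

PAYLOAD_KG_PER_GPU = 10.0
TERRESTRIAL_USD_PER_GPU = 10_000.0


def break_even_usd_per_kg(terrestrial_usd: float = TERRESTRIAL_USD_PER_GPU,
                          payload_kg: float = PAYLOAD_KG_PER_GPU) -> float:
    """Launch price per kg at which launch cost equals the terrestrial cost."""
    if payload_kg <= 0:
        raise ValueError("payload mass must be positive")
    return terrestrial_usd / payload_kg


def terrestrial_to_orbital_ratio(usd_per_kg: float,
                                 terrestrial_usd: float = TERRESTRIAL_USD_PER_GPU,
                                 payload_kg: float = PAYLOAD_KG_PER_GPU) -> float:
    """How many times larger the terrestrial cost is than the launch cost."""
    launch_usd = usd_per_kg * payload_kg
    if launch_usd <= 0:
        raise ValueError("launch cost must be positive")
    return terrestrial_usd / launch_usd


if __name__ == "__main__":
    print(f"Break-even launch price: ${break_even_usd_per_kg():,.0f}/kg")
    for label, price in [("Falcon Heavy", 1_500.0), ("Starship target", 100.0)]:
        ratio = terrestrial_to_orbital_ratio(price)
        # ratio < 1: launch costs more than terrestrial infrastructure
        # ratio > 1: launch is cheaper by that factor
        print(f"{label} (${price:,.0f}/kg): terrestrial/orbital ratio = {ratio:.2f}")
```

Under these assumptions the break-even price is about $1,000/kg, which sits between Falcon Heavy's current price (ratio about 0.67) and Starship's $100/kg target (ratio 10).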

Why This Matters for Markets

Infrastructure economics are already influencing stock performance and capital allocation.

For example, Finly recently reported on NVDA and data center funding news, where a failed $10B AI infrastructure deal had an immediate market impact. That episode underscored how sensitive cloud and AI players are to funding and infrastructure costs.

If orbital compute becomes viable—even for a portion of high-value AI workloads—it could:

- Reduce long-term energy expenditures

- Reshape data center funding models

- Change capacity planning strategies

- Influence deployment demand for Nvidia GPUs

Given Nvidia’s dominance in AI training workloads, any structural shift in where compute is hosted could have meaningful implications for the broader AI ecosystem.

Technical Challenges and Timeline

Despite the compelling economics, major technical hurdles remain:

- Thermal management in vacuum

- Radiation shielding

- Maintenance logistics in orbit

- Reliable long-duration power systems

Starship must consistently achieve ultra-low launch costs, and orbital infrastructure must prove operationally viable before large-scale deployment becomes realistic.

The timeline remains uncertain, but the financial case is strengthening.

Outlook

Space-based data centers may sound futuristic, yet the economics are beginning to align. As power and cooling costs rise on Earth and launch prices fall, orbital compute could emerge first for specialized AI workloads where energy efficiency is critical.

If launch costs continue declining, the conversation around AI infrastructure may shift from whether space hosting is viable to when it becomes commercially competitive.

Source: Twitter post by Tomas Pueyo